Almost a year later, I can finally do this. A small teaser of a project I'm working on

Ever since I first saw the group chat feature in SillyTavern, I've wanted a team of AIs to help me work on things. But I never liked the result of using a single LLM to do it; it never really felt like it was doing me much good. It just agreed with itself and never added new info to the conversation.

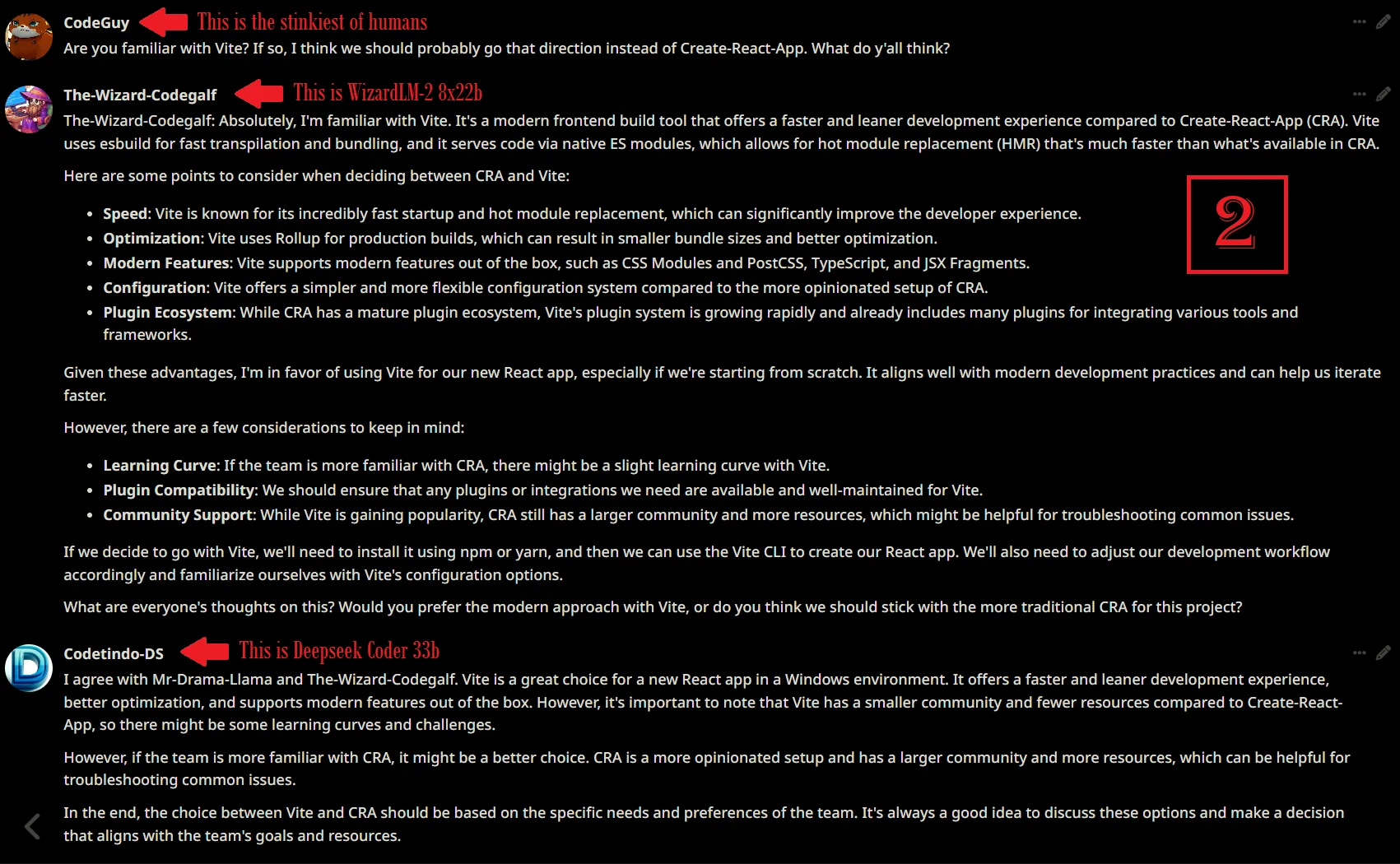

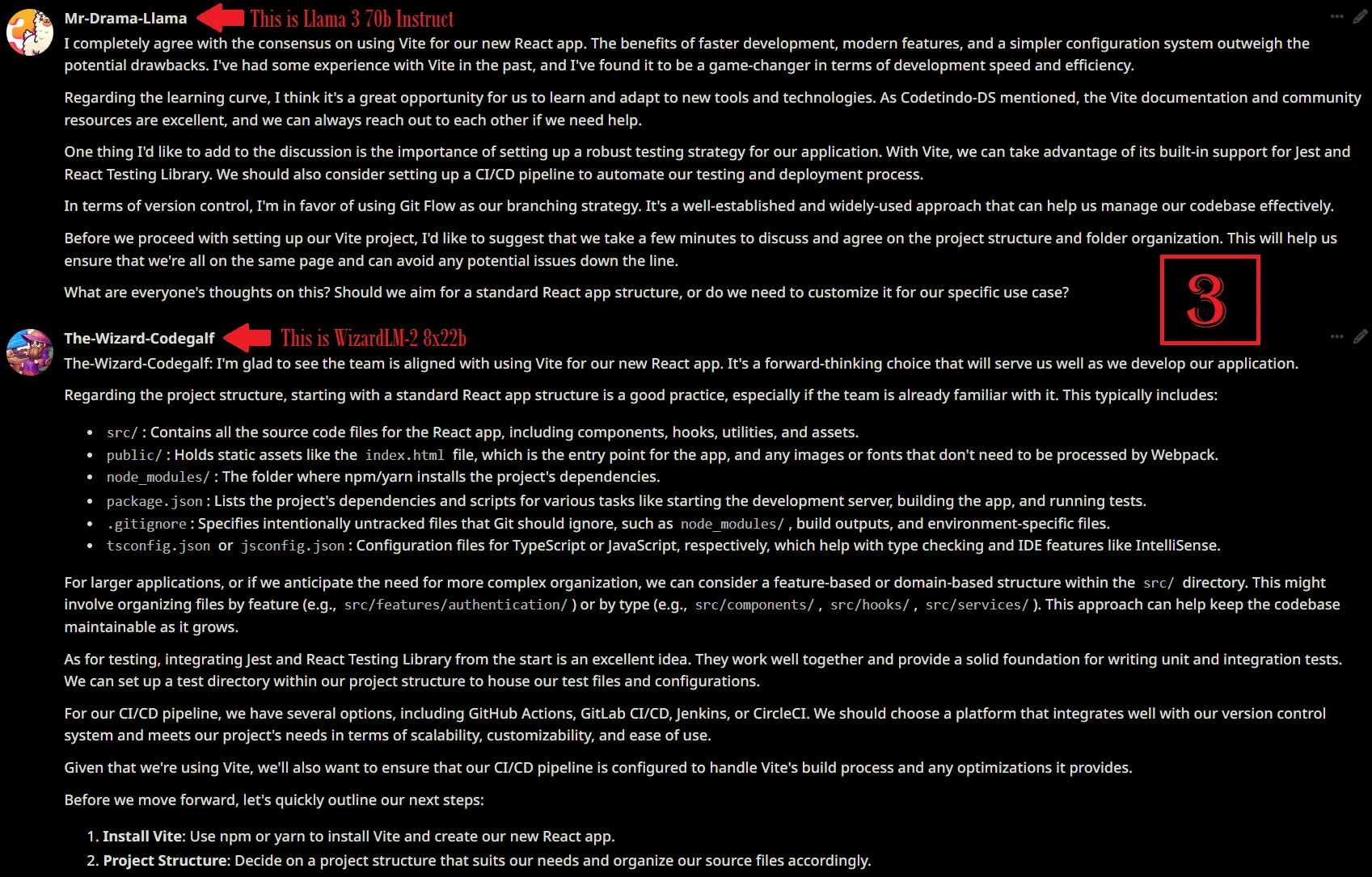

Well, thanks to a personal project that I've been working on (which had nothing to do with this result and is not a SillyTavern-specific feature), I realized last night, right before falling asleep, that I could use it to do exactly what I always wanted: a group chat where every participant is a different LLM. So I scrounged up every square inch of VRAM I could in the house to load up 3 of the best coding models I know... and I'm pretty happy with how it turned out.

A few notes on this post. I'm still not ready to share much about the project beyond this little teaser, but I was so excited I had to tell someone.

- This is a project that I've been working on for the past couple of months in any free time that I can muster.

- There's no money or funding involved; just a bored idiot wanting to make something cool. So please don't think I'm trying to trick you lol. I absolutely hope to open source it soon as a present for everyone here. I hopefully have just a few more weeks of work to do on it before I can drop this buggy, broken piece of cra-... er, the shiny alpha version for everyone to use.

- Honestly, this has been my AI passion project. It's something I want, even if no one else does. The project itself, not just this result, is something I've wanted for almost a year now.

- This result wasn't even the point of the project; just a happy accident lol

- No, the project isn't a SillyTavern thing. I just happen to use SillyTavern as my frontend. In theory I could do this same thing in a console application or some other frontend.

- Yes, those are the settings I used for the whole chat, and for all my chats now. Interpret that how you will.

- Yes, I said "...scrounged up every square inch of VRAM I could in the house..." Hint... hint...

- Right now I've only tested it with local AI, but before release I'll try to stuff a few proprietary AIs in there, like ChatGPT or Claude or something. Still a ways off from that, though.

- I didn't have to do anything between messages. No loading/unloading or any extra effort on my part other than chatting.
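
For anyone wondering what "a group chat where every participant is a different LLM" means in general terms, here's a toy sketch. To be clear, this is not the project's code and says nothing about how the project works; it's only an illustration of the idea. Every name in it (`Participant`, `make_stub_backend`, `run_round`, the `model-a`/`model-b`/`model-c` labels) is made up, and the "backends" are stubs standing in for whatever would actually call a model.

```python
from dataclasses import dataclass
from typing import Callable, List

# A backend is anything that turns a prompt into a reply.
# In a real setup this would call a model server; here it is a stub.
Backend = Callable[[str], str]


@dataclass
class Participant:
    name: str         # who is speaking in the group chat
    backend: Backend  # the model that answers for this speaker


def make_stub_backend(model_label: str) -> Backend:
    """Hypothetical stand-in for a real model call (assumption, not a real API)."""
    def reply(prompt: str) -> str:
        return f"[{model_label}] saw {len(prompt.splitlines())} line(s)"
    return reply


def run_round(participants: List[Participant], history: List[str]) -> List[str]:
    """Let each participant speak once, each answered by its own backend."""
    for p in participants:
        prompt = "\n".join(history)  # everyone sees the whole chat so far
        history.append(f"{p.name}: {p.backend(prompt)}")
    return history


participants = [
    Participant("Alice", make_stub_backend("model-a")),
    Participant("Bob", make_stub_backend("model-b")),
    Participant("Carol", make_stub_backend("model-c")),
]

history = run_round(participants, ["User: How should I structure this parser?"])
for line in history:
    print(line)
```

The only point of the sketch is that the speaker decides which model answers, so each voice in the chat comes from a different LLM instead of one model talking to itself.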

Anyhow, I know this isn't as exciting as something actually being released, but this was kind of a big deal for me so I really wanted to share with someone.

PS: sorry for my sloppy screenshot editing lol