My Personal Guide for Developing Software with AI Assistance - 2026 Edition

What's Changed Since 2024

So back in May of 2024 I wrote the first version of this little guide, at a time when agents were absolute crap and Wilmer was still in a state that couldn't even be called v0.01. Back then, most folks simply couldn't find value in AI-assisted dev; the models made tons of errors, the quality was awful, etc.

When I first posted it on Reddit, it got a ton of interest. Folks were sharing on other sites, in other languages... it was a weird feeling, but I was glad folks found it helpful. Some variation of that workflow is what I used for years.

Until September 2025, to be exact.

Enter Claude Code. I decided to give it a try because work was getting too heavy for me to put any time into personal projects, and I honestly didn't want to give up on Wilmer entirely. My old workflow simply took more energy than I had left over, and I was finally willing to accept the quality hit that comes with letting agents get involved.

I'll just say it: Claude Code has won me over, and I now find myself using it far more. However- I don't "Vibe Code" in the sense that most people think of it. Instead, I use it like a junior developer: I handle all the architecting, planning and design up front via AI-assisted chat workflows and heavy use of Deep Research functionality, leaving very little creative expression to the agents.

Even with all of that up-front work (which generally takes a fair bit of time), I find that I get more value, and faster iterations, than folks who just vibe code and then give up in the final stretch because the bugs pile up and the code becomes unmaintainable.

Wilmer is still a huge part of my daily LLM use, though. I use it primarily for chatbot workflows (decision trees and whatnot to better control the output quality), and also for quality gates when running small agent scripts. I've got a lot more plans for Wilmer, even as it gets up there in age. But for pure development? A huge chunk of what I used it for has been taken over by agents and online chatbots with Research functionality.

How I Code Today

First- coding with an agent is completely different from standard dev. In fact, IMO- if you're doing it right, then the vast majority of the time you will find yourself setting aside your developer hat for an architect hat. You are, essentially, acting as a team lead for a robot that is roughly equivalent to a competent junior dev (who can type faster than you can think lol). It is your job to direct that dev in such a way that they build something amazing.

For reference: I've built several internal apps this way, I've been using this for some work in Wilmer, and I have a new app I'm hoping to open source by Spring that is completely being built this way.

Rule Number 1: Use Deep Research. A Lot

Claude, Gemini, ChatGPT, etc. all suffer from the same two core problems when it comes to dev:

- Hallucinations

- Outdated information that they don't realize is outdated

This is where Deep Research comes in. When you start to reach a consensus with the AI, or it starts to give you some pretty in-depth recommendations, stop everything and ask it for a deep research prompt. Ask it to write that prompt so that it validates the information it gave you and also looks for developer opinions on that solution in blog posts, forum posts, or other online discussions.

Once you get the prompt, open a new window, select the Research/Deep Research option, and give it the prompt. After the deep research finishes, copy the result and take it back to the original chat window. I generally paste it like this:

Below is the result of the deep research prompt

<research_1>

// words words words

</research_1>

Please use this and reconsider the above recommendations.

This ALWAYS results in it going "oops, wow, I was completely off base there. Let's go back and talk through this again".

On average, when I'm building a small-scale app, I'll do between 15 and 20 different deep researches. On big apps? I could easily hit 50+.
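With that many rounds, it can be worth scripting the paste format above so the tags stay consistent. Here's a minimal sketch in Python. `wrap_research` is a hypothetical helper name, and it simply reproduces the template shown earlier. The numbered `<research_N>` tags are just a convention, not something any tool requires.

```python
def wrap_research(result: str, index: int) -> str:
    """Wrap a Deep Research result in numbered tags, following the paste template above.

    Hypothetical helper: the <research_N> tag naming is only a convention.
    """
    tag = f"research_{index}"
    return (
        "Below is the result of the deep research prompt\n\n"
        f"<{tag}>\n\n"
        f"{result.strip()}\n\n"  # trim stray whitespace from the copied result
        f"</{tag}>\n\n"
        "Please use this and reconsider the above recommendations."
    )


if __name__ == "__main__":
    print(wrap_research("words words words", 1))
```

Bumping the index for each new research keeps every result distinct when you reference them later in the same chat ("see research_3").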

It also helps a lot to actually know the material. You can catch a lot of mistakes yourself if you know how to do this work, so whenever you get free time, I recommend watching some tutorials on anything you find yourself lacking in. Your own knowledge level is a huge limiting factor on the quality of what the AI can put out.

Read the Sources

When you're reading the deep research, look at how it reached its conclusions. Even with DR, I've seen it make pretty big mistakes, like misinterpreting something to mean something it doesn't. You have to be careful. Make sure you understand what the Deep Research is saying before you give it to the LLM.

Architect First

You need to understand what you're building, at a core level.

One critical weakness of Claude Code and a lot of other coding agents is that they really can't architect right off the bat. The combination of outdated information, an incomplete grasp of some security concepts, and hallucinations along the way can produce some pretty wild results.

A lot of teams skip this step, which likely contributes a lot to that "95% of AI ventures fail" stat. You see it all the time: developers complaining about unmaintainable vibe-coded software that is falling apart at the seams with tons of critical bugs and security issues.

The reality is that the same thing would happen if you just tasked a pile of fresh entry-level junior devs with writing a complex system, too. You have to think like a team lead and give them what they need to succeed in spite of their inexperience.

This means you can't just tell the AI "Go make me _____". You have to lead it there.

So How Do I Architect?

When architecting- I now make massive use of AI chat workflows. In the case of Claude, you'd want to move to the website version, as it has the enhanced thinking option and web search/Research available.

Break down the requirements and get some recommendations. Vet the recommendations and make counter-proposals. When you start to narrow down on something, make sure to ask it to do web searches to confirm that the information is up to date, or do a deep research for a deeper confirmation. Then take that architecture, give it back to Claude (or, even better, to another LLM- just like in the original guide), and do another pass over it.

Plan Everything

You're planning all of it. You're planning the UI. You're planning what languages to use, what tools to use, what design patterns to employ. You're even giving it example code to ensure that everything it writes is uniform. You're planning how you'll handle code quality, CI/CD, deployments, how pieces will talk to each other. You're planning the testing strategy and the database setup. You're planning the security, the logging. Even the naming schemes.

All of it. You're planning all of it.

The goal here is that when you finish the Architecture step, there's no question about what the LLM is building. It knows how the project is structured, down to the folder and even script/class level. It knows how the code should work, what to use for it, and everything else. There's very little creative expression left for that bot.

Even if you do it all yourself without AI assistance, at least let the LLM double-check your work. It is honestly such a fantastic tool for being a "second set of eyes".

SAVE YOUR PROMPTS

You'll reuse prompts a lot. Save the description of what you want from the project. Save your requirements. Save the architecture that you get back. Keep each of them in little tags, like this:

Consider the below project description:

<description>

</description>

And here are some of the features I'm aiming to achieve:

<features>

</features>

Keeping those blocks around so that you can reuse them in new prompts helps so much. I save tons of time with it.
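Here's a minimal sketch of that reuse in Python. It assumes you keep the saved blocks in a simple dict; loading them from files works just as well. `SAVED_BLOCKS`, `tag_block`, and `build_prompt` are hypothetical names, and the block contents are placeholder text.

```python
# Hypothetical store of saved prompt blocks, keyed by tag name (placeholder contents).
SAVED_BLOCKS: dict[str, str] = {
    "description": "A self-hosted app for tracking personal reading lists.",
    "features": "- Import books by ISBN\n- Tag and search entries",
}


def tag_block(tag: str, body: str) -> str:
    """Wrap a saved block in <tag>...</tag> so it can be pasted into any prompt."""
    return f"<{tag}>\n{body.strip()}\n</{tag}>"


def build_prompt(intro_by_tag: dict[str, str], ask: str) -> str:
    """Assemble a new prompt from saved blocks, each preceded by its intro line."""
    parts = []
    for tag, intro in intro_by_tag.items():  # dicts keep insertion order
        parts.append(intro)
        parts.append(tag_block(tag, SAVED_BLOCKS[tag]))
    parts.append(ask)  # the new question goes last, after all the context
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(build_prompt(
        {
            "description": "Consider the below project description:",
            "features": "And here are some of the features I'm aiming to achieve:",
        },
        "Please propose a folder structure for this project.",
    ))
```

Only the final question changes between prompts; the tagged context blocks stay identical every time.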

Break Down the Work Into Sections

I always break the project down into logical chunks; think sprint iterations. This helps for a couple of reasons:

- A) It helps me review the overall decisions better. Now I can take each chunk back to the web version for one final code review, changes, etc. without rewriting the WHOLE project

- B) It makes life easier for Claude Code. The files are smaller, and it's able to compact its context window between features.

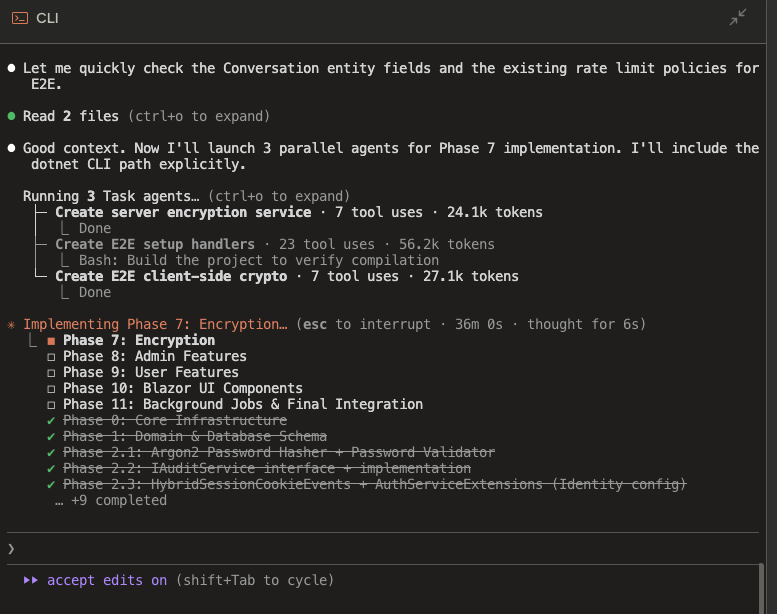

An example from a little personal project I'm working on during the weekends:

When I break the work apart like that, I also always make sure to have one file for the "Project Overview". This gives a high-level overview of the project, my goals, the features, and what files exist and what they contain. This helps all the LLMs keep track of progress, understand how the pieces fit together, etc.
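The "what files exist" part of that overview goes stale fast once agents start adding files. One option is to regenerate the file listing and paste it in. Below is a sketch of a hypothetical Python helper for that (`list_project_files` is a made-up name). It only lists paths; the "what they contain" descriptions still have to come from you or the LLM.

```python
import os

# Directories that usually shouldn't appear in a project overview (adjust to taste).
DEFAULT_SKIP_DIRS = frozenset({".git", "node_modules", "__pycache__", ".venv"})


def list_project_files(root: str, skip_dirs: frozenset = DEFAULT_SKIP_DIRS) -> str:
    """Return a sorted Markdown bullet list of files under root, as relative paths."""
    lines = []
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune skipped directories in place so os.walk doesn't descend into them.
        dirnames[:] = [d for d in dirnames if d not in skip_dirs]
        for name in filenames:
            rel = os.path.relpath(os.path.join(dirpath, name), root)
            # Normalize separators so the listing looks the same on every OS.
            lines.append("- " + rel.replace(os.sep, "/"))
    return "\n".join(sorted(lines))


if __name__ == "__main__":
    print(list_project_files("."))
```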

Set the Agents Loose

Once I have the full architecture laid out- I let the bots run wild.

For me, a smaller project can take a few hours of agent time and burn through my 5x Max usage limit a few times. I always use Opus 4.5 for it, which doesn't help either, but it's worth it. In practice, that means even a smaller project can take a couple of days for this step while I wait for usage to come back.

Code Reviews

Ah... welcome to being a real senior dev. Our favorite thing- code reviews (sarcasm...).

You need to know what it wrote, down to a granular level. If it put a security bug in the code? YOU put a security bug in the code. You, like any senior dev or team lead or dev manager, are responsible for the code you commit. When a bug hits production, the agent didn't fail. You failed.

This part takes time, and it can be grueling, but with a bit of patience and the help of some AI chatbots, you can get through this alright. Take your time.

Among other things, you're looking for:

- Obvious failings to meet the specs of your architecture

- Security flaws

- Really really bad or inefficient code. Did it create unnecessary loops? Tons of duplication? Do something that just doesn't make sense? Your job is to find that and call it out.
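As a hypothetical illustration of the "unnecessary loops" point (not taken from any real agent output), this is the kind of pattern worth flagging. The first version checks membership against a list inside a loop, so it rescans that list on every iteration. The second builds a set once instead.

```python
def find_banned_users_slow(user_ids: list[int], banned_ids: list[int]) -> list[int]:
    # Review flag: `in` on a list is a linear scan, so this is
    # O(len(user_ids) * len(banned_ids)) overall.
    return [uid for uid in user_ids if uid in banned_ids]


def find_banned_users(user_ids: list[int], banned_ids: list[int]) -> list[int]:
    # Fix: build the set once; each membership check is then O(1) on average.
    banned = set(banned_ids)
    return [uid for uid in user_ids if uid in banned]
```

Both return the same result in the same order, so a fix like this shouldn't need any test changes, only a note in the review.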

Once I've identified the issues, I document them in a new .md file and send the agent back to work.

I generally keep looping like this until I have a good, working feature or project.
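For that .md file, a simple checklist format gives the agent something concrete to work through. Below is a minimal sketch in Python. `Finding` and `render_findings` are hypothetical names, and the checklist layout is just one reasonable way to structure it.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    """One issue found during review (hypothetical structure)."""
    category: str     # e.g. "Spec", "Security", "Inefficiency"
    location: str     # file and/or function the agent should look at
    description: str  # what is wrong and, ideally, what "fixed" looks like


def render_findings(title: str, findings: list[Finding]) -> str:
    """Render review findings as a Markdown checklist the agent can work through."""
    lines = [f"# {title}", ""]
    for f in findings:
        lines.append(f"- [ ] **{f.category}** ({f.location}): {f.description}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Print the checklist; redirect or write it to whatever .md file you hand the agent.
    print(render_findings("Review: login feature", [
        Finding("Security", "auth/session.py", "Session token is logged in plain text."),
    ]))
```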

Use Linters, Quality Scanners, etc. if you can.

I find that SonarQube and the like can help a lot here. CI/CD pipelines can also help with finding out-of-date libraries, as can simply turning on Dependabot on GitHub. That's especially useful if I missed where the AI recommended a library/package version that's like 2 years old and has tons of CVEs lol.

My CLAUDE.md File

I always specify a few things in my CLAUDE.md (though I usually word it differently than below):

- Minimal in-line commenting. Explain 'why' on complex code, but never explain 'what'. The code should be self-explanatory.

- No emojis or flowery marketing language in the docs or comments

- Always keep the documentation up to date (and always write documentation in a docs folder)

- Never modify code unnecessarily. Code should always be modified with an express purpose; do not apply 'little fixes'. If you see something that needs to be fixed, put down a TODO.

- Always run unit tests. Unit tests should always pass.

- Never modify a unit test to make it pass unless there is specific justification, such as the test covering functionality that is now outdated. Always assume the unit test is authoritative and correct the code to match the test.

Do The Same for the IT Portions

If you have to deploy, be careful about trusting the LLM to help you figure out how. It will probably be wrong in a lot of what it tells you. Just accept that. Go in saying the words "What it tells me is going to be wrong". Challenge everything. Do Deep Researches like your life depends on it. Watch tutorials. Read guides. LEARN.

Don't do things you don't understand. That's how people lose money.

The End?

That's pretty much it. It's a lot of work, and it's definitely not 10x productivity, but I get much better results than I would by just having unmanaged agents write my code.

One day this might change. Two years ago I said I'd never use agents. Here I am. Two years from now I may say "I don't need to do this anymore; it now architects as well as I do from day 1". That's fine, too.

But for right now- as developers, quality is our job and our goal. Using AI is amazing for development, and definitely speeds things up, but we have to make sure to use it responsibly and focus on quality, security and reliability above all.

I will always push back when I need to if someone is pressing me to "go faster". Gabe Newell paraphrased a decades-old quote quite well: “Late is just for a little while. Suck is forever." They won't remember that I folded and rushed to meet their deadline; they'll remember that I delivered them unusable crap. And so will I.